How might we bridge the gap between technical possibility and human connection in real-time telepresence?

Project Overview

TeleWindow is an ongoing research initiative led by Michael Naimark that reimagines video calls through the lens of spatial computing. The system creates a "window" between spaces, enabling natural eye contact and spatial presence through real-time 3D reconstruction.

Current System

Capture

Four-camera array for volumetric capture of the subject

Processing

Real-time point cloud fusion and perspective correction

Display

Eye-tracked autostereoscopic output on a SeeFront display
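The "window" effect depends on perspective correction driven by the tracked eye position. A common way to do this for a window-like display is an off-axis (asymmetric) frustum, recomputed every frame for each eye. The write-up does not say TeleWindow uses exactly this formulation. The sketch below assumes the screen lies in the z = 0 plane centered at the origin, the eye sits at positive z, and all lengths share one unit. The function names and the default near/far values are hypothetical.

```python
"""Off-axis (asymmetric) frustum for a window-like, eye-tracked display.

Hypothetical sketch: screen in the z = 0 plane, centered at the origin;
tracked eye at (ex, ey, ez) with ez > 0. Units are arbitrary but shared.
"""
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def off_axis_frustum(eye, screen_w, screen_h, near, far):
    """Project the physical screen edges onto the near plane as seen from `eye`."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (ez > 0)")
    scale = near / ez  # similar triangles: screen plane -> near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
        far=far,
    )


def frustum_matrix(f):
    """Row-major 4x4 projection matrix with the same layout as OpenGL's glFrustum."""
    rl, tb, fn = f.right - f.left, f.top - f.bottom, f.far - f.near
    return [
        [2 * f.near / rl, 0.0, (f.right + f.left) / rl, 0.0],
        [0.0, 2 * f.near / tb, (f.top + f.bottom) / tb, 0.0],
        [0.0, 0.0, -(f.far + f.near) / fn, -2 * f.far * f.near / fn],
        [0.0, 0.0, -1.0, 0.0],
    ]


def stereo_frustums(head, ipd, screen_w, screen_h, near=0.05, far=10.0):
    """One frustum per eye, offsetting the tracked head position by half the IPD."""
    hx, hy, hz = head
    left_eye = (hx - ipd / 2, hy, hz)
    right_eye = (hx + ipd / 2, hy, hz)
    return (off_axis_frustum(left_eye, screen_w, screen_h, near, far),
            off_axis_frustum(right_eye, screen_w, screen_h, near, far))
```

The projection only skews the frustum; the matching view matrix must also translate the scene by the negated eye position, so the rendered image stays registered to the physical screen as the viewer moves.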

Research Challenges

Visual Coherence

Even with static transformation matrices computed by ICP (Iterative Closest Point) registration, the four point clouds never align perfectly. The misalignment grows for objects close to the cameras, where perspective distortion and depth-sensor noise are more pronounced.
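To make the alignment problem concrete, the sketch below applies fixed per-camera 4x4 extrinsics (assumed to have been computed offline, for example by ICP, and then frozen) to bring each camera's points into a shared frame, and measures the remaining misalignment as an RMS nearest-neighbour distance. The function names are hypothetical, points are plain (x, y, z) tuples, and the brute-force search is only suitable as an offline sanity check, not as part of a real-time pipeline.

```python
"""Fuse per-camera point clouds with static 4x4 extrinsics and measure misalignment.

Hypothetical sketch: each extrinsic matrix maps camera space -> shared world
space and is held fixed after an offline registration step (e.g. ICP).
"""
import math


def transform_points(matrix, points):
    """Apply a row-major 4x4 rigid transform to a list of (x, y, z) points."""
    out = []
    for x, y, z in points:
        out.append(tuple(
            row[0] * x + row[1] * y + row[2] * z + row[3] for row in matrix[:3]
        ))
    return out


def fuse(clouds, extrinsics):
    """Bring every camera's cloud into the world frame and concatenate them."""
    fused = []
    for cloud, matrix in zip(clouds, extrinsics):
        fused.extend(transform_points(matrix, cloud))
    return fused


def rms_nearest_distance(source, target):
    """RMS distance from each source point to its nearest target point.

    Brute force O(n * m). A residual that stays well above the sensor noise
    after registration is the kind of misalignment described above.
    """
    total = 0.0
    for p in source:
        total += min(math.dist(p, q) for q in target) ** 2
    return math.sqrt(total / len(source))
```

Because the matrices are static, any error in them, or any non-rigid distortion in a camera's depth that a single rigid transform cannot model, shows up directly as a seam in the fused cloud.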

Volumetric Representation

Point clouds are computationally efficient but carry no surface: without connected geometry, they cannot fully participate in virtual environments, whether by responding to lighting or by taking part in physical behaviors such as collisions.

Research Approaches

1. Surface Reconstruction

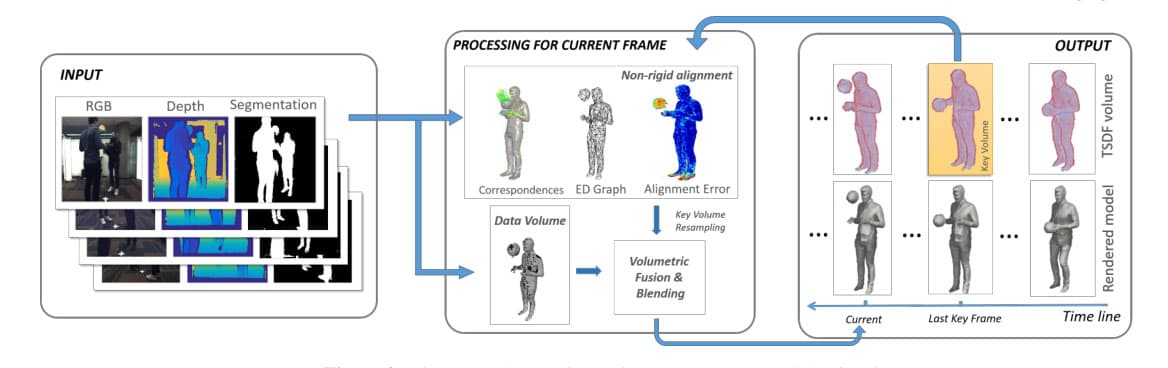

State-of-the-art systems such as Microsoft's Fusion4D achieve high-quality reconstruction, but they rely on proprietary algorithms and different camera configurations. Our setup, with its closely positioned cameras, called for a different approach.

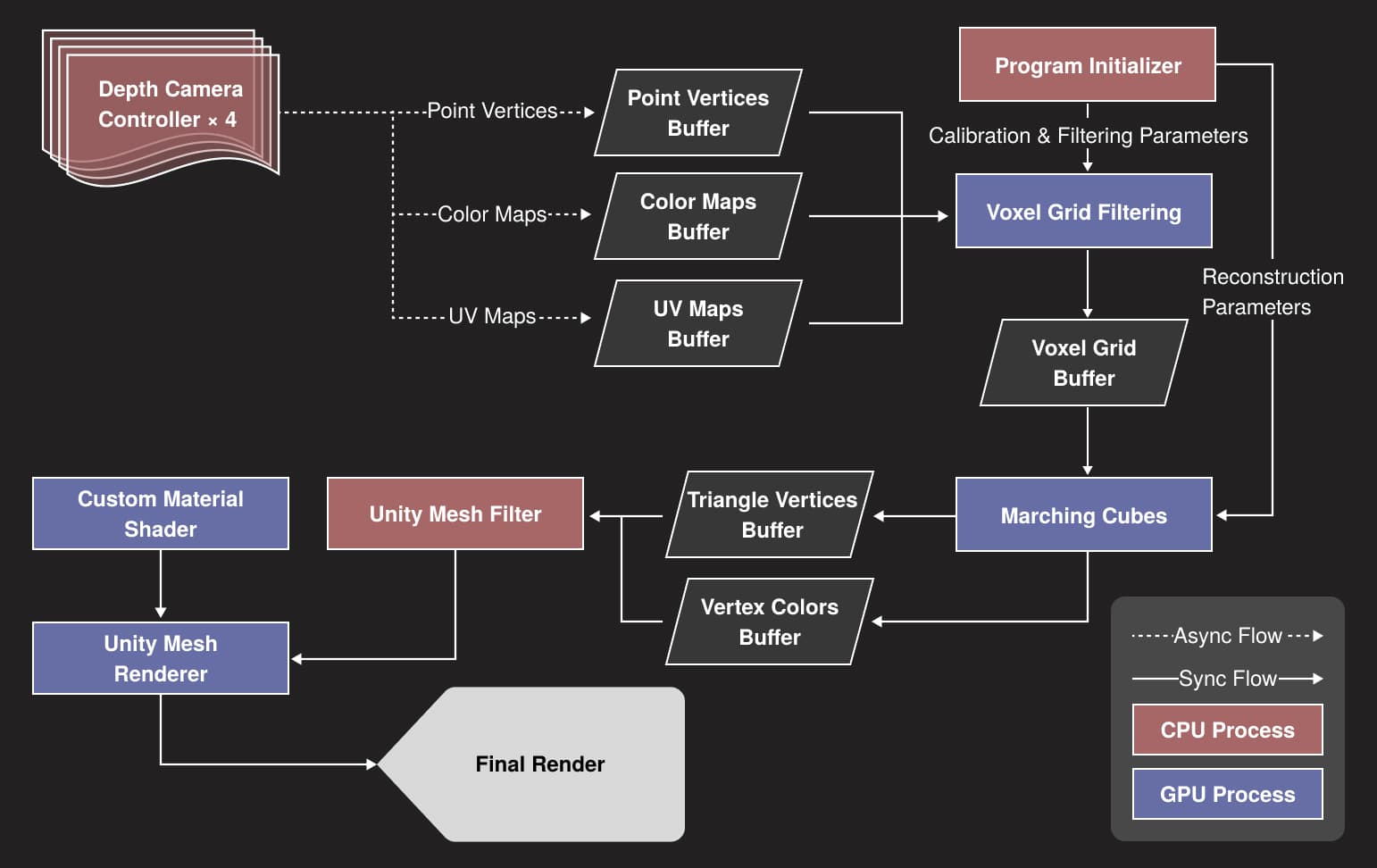

We therefore explored GPU-accelerated Marching Cubes, which prior research suggested could run in real time at our data scale.
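Marching Cubes suits the GPU because every voxel is processed independently. The sketch below shows the per-voxel core only: building the 8-bit case index from the corner samples and placing a vertex on each edge the isosurface crosses. The standard 256-entry edge and triangle lookup tables that turn the case index into triangles are omitted for length, and the scalar field (for example a signed distance sampled from the fused point cloud) is assumed. The corner and edge numbering is a common Bourke-style convention; whatever lookup tables are used must share it.

```python
"""Per-voxel core of Marching Cubes: case index and edge-vertex interpolation.

Minimal sketch; the triangle lookup tables are intentionally omitted.
"""

# Corner offsets of a unit cube: bottom face 0-3, top face 4-7.
CORNERS = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),
]

# The 12 cube edges as pairs of corner indices.
EDGES = [
    (0, 1), (1, 2), (2, 3), (3, 0),
    (4, 5), (5, 6), (6, 7), (7, 4),
    (0, 4), (1, 5), (2, 6), (3, 7),
]


def cube_index(values, iso):
    """8-bit case index: bit i is set when corner i is inside (value < iso)."""
    index = 0
    for i, v in enumerate(values):
        if v < iso:
            index |= 1 << i
    return index


def interpolate_edge(p0, p1, v0, v1, iso):
    """Linearly place the isosurface crossing between two corner positions."""
    if abs(v1 - v0) < 1e-12:
        return p0
    t = (iso - v0) / (v1 - v0)
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


def crossing_vertices(origin, size, values, iso=0.0):
    """Return {edge_id: vertex} for every edge the isosurface crosses in one voxel."""
    idx = cube_index(values, iso)
    if idx == 0 or idx == 255:
        return {}  # voxel entirely outside or entirely inside: no surface here
    corners = [tuple(o + size * c for o, c in zip(origin, off)) for off in CORNERS]
    verts = {}
    for e, (a, b) in enumerate(EDGES):
        if bool(idx & (1 << a)) != bool(idx & (1 << b)):
            verts[e] = interpolate_edge(corners[a], corners[b],
                                        values[a], values[b], iso)
    return verts
```

On a GPU, each invocation of a compute shader would run this logic for one voxel and then emit triangles from the lookup tables, which is why the grid resolution directly drives both memory use and per-frame cost.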

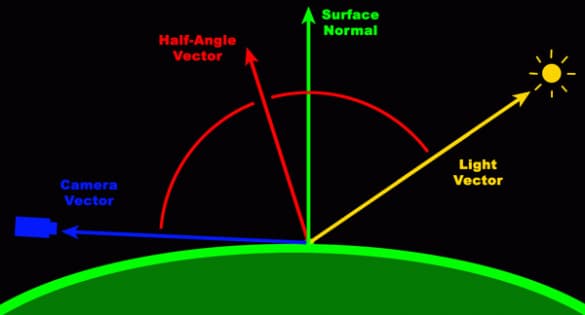

2. Normal-Based Relighting

Challenge

Adding realistic lighting to point clouds without full reconstruction

Approach

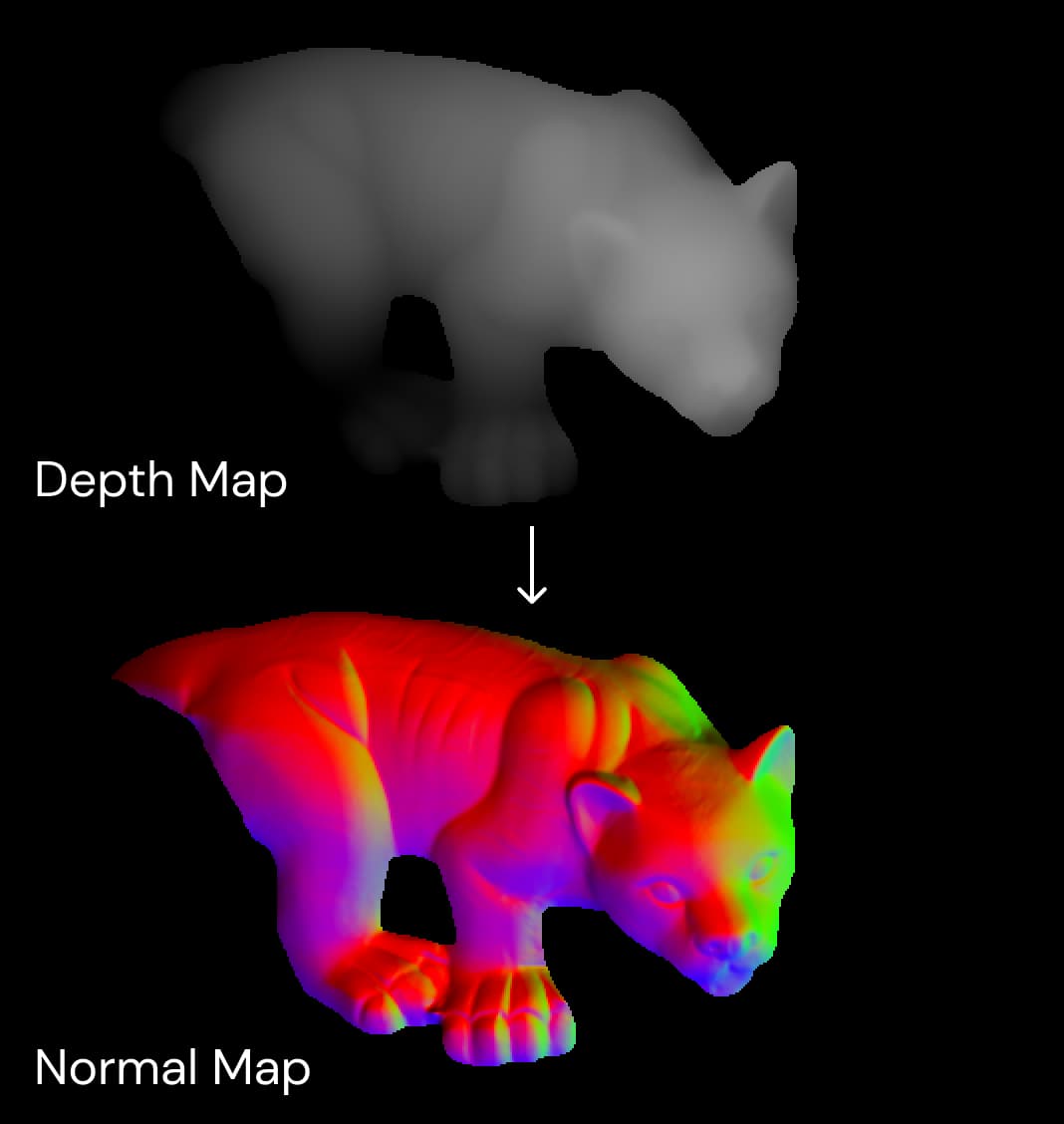

Generating normal vectors from depth maps for lighting calculations

Implementation

Custom shaders for real-time normal calculation and lighting
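The approach above can be sketched on the CPU as what a fragment or compute shader would do per pixel: back-project neighbouring depth samples to 3D, take central differences, cross them to get a normal, then shade with a Lambertian (diffuse) term. The pinhole intrinsics (fx, fy, cx, cy), the single directional light, and the ambient value are assumptions for illustration, not TeleWindow's actual parameters; the function names are hypothetical.

```python
"""Normals from a depth map plus Lambertian relighting, per pixel.

Hypothetical sketch. Camera looks down +z, image u -> +x, image v -> +y.
"""
import math


def back_project(u, v, depth, fx, fy, cx, cy):
    """Pixel (u, v) with metric depth -> camera-space 3D point (pinhole model)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v) if n > 0 else (0.0, 0.0, 0.0)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normal_at(depth_map, u, v, fx, fy, cx, cy):
    """Camera-facing unit normal via central differences; None at borders or holes."""
    h, w = len(depth_map), len(depth_map[0])
    if not (0 < u < w - 1 and 0 < v < h - 1):
        return None
    samples = [depth_map[v][u - 1], depth_map[v][u + 1],
               depth_map[v - 1][u], depth_map[v + 1][u]]
    if any(d <= 0 for d in samples):
        return None  # a missing depth reading (0) would produce a bogus normal
    left = back_project(u - 1, v, samples[0], fx, fy, cx, cy)
    right = back_project(u + 1, v, samples[1], fx, fy, cx, cy)
    up = back_project(u, v - 1, samples[2], fx, fy, cx, cy)
    down = back_project(u, v + 1, samples[3], fx, fy, cx, cy)
    du = tuple(r - l for r, l in zip(right, left))
    dv = tuple(d - p for d, p in zip(down, up))
    # dv x du (rather than du x dv) orients the normal toward the camera (-z).
    return normalize(cross(dv, du))


def lambert(normal, light_dir, albedo, ambient=0.1):
    """Diffuse shading: albedo * (ambient + max(0, N.L)), clamped to 1.

    `light_dir` points from the surface toward the light.
    """
    l = normalize(light_dir)
    n_dot_l = max(0.0, sum(a * b for a, b in zip(normal, l)))
    return tuple(min(1.0, c * (ambient + n_dot_l)) for c in albedo)
```

Differences of raw depth amplify sensor noise, so in practice the depth map is usually smoothed (for example with a bilateral filter) before this step.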

Implementation Results

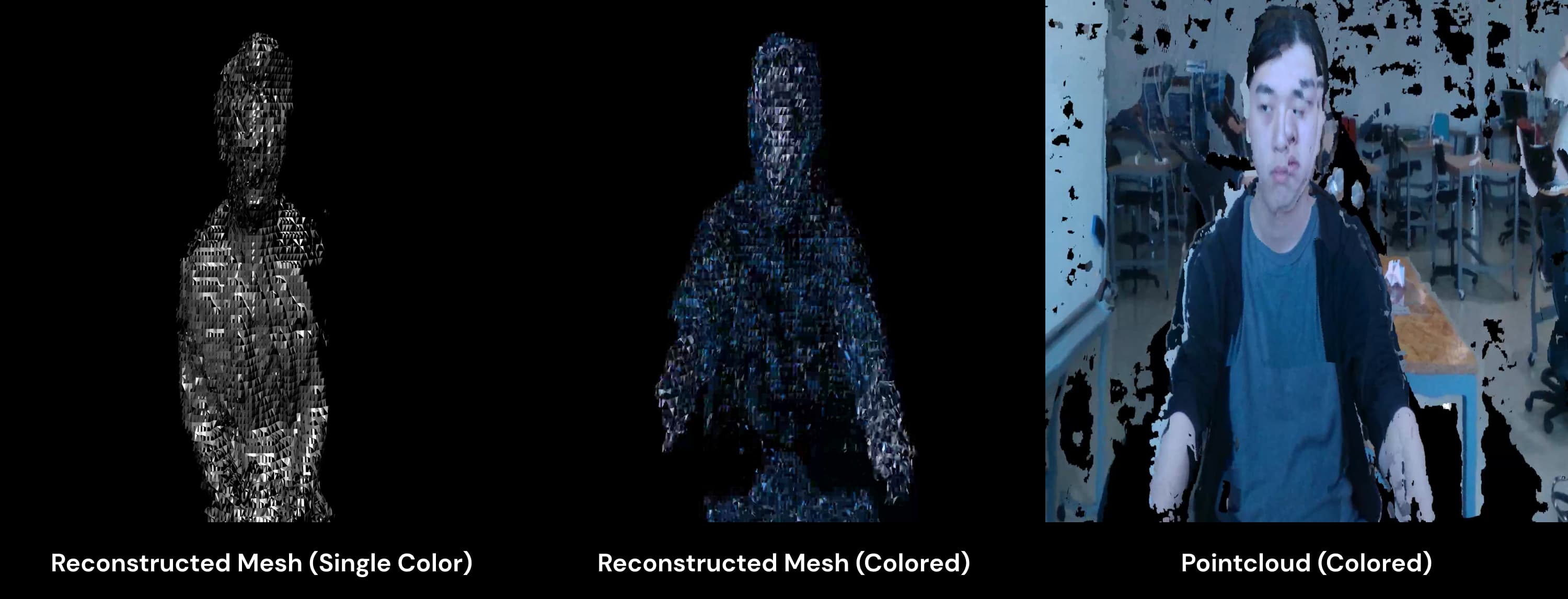

Surface Reconstruction

Memory constraints limited our implementation to a 256³ voxel grid. Even so, the reconstructed meshes rendered faster than the raw point clouds and integrated better with virtual environments, at the cost of lower visual fidelity.
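A quick calculation shows why resolution is memory-bound: a dense grid grows with the cube of its resolution. The bytes-per-voxel figures below are assumptions for illustration (a 32-bit float distance, optionally with a 32-bit weight); the write-up does not state what was stored per voxel, and output buffers for the generated mesh would add to these totals.

```python
"""Back-of-the-envelope memory for a dense voxel grid (assumed per-voxel sizes)."""

MIB = 1024 ** 2


def grid_bytes(resolution, bytes_per_voxel):
    """Total bytes for a resolution^3 grid storing bytes_per_voxel per cell."""
    return resolution ** 3 * bytes_per_voxel


for res in (128, 256, 512):
    for label, bpv in (("float distance", 4), ("distance + weight", 8)):
        print(f"{res}^3, {label}: {grid_bytes(res, bpv) / MIB:,.0f} MiB")
```

A single float per voxel at 256³ already comes to 64 MiB, and each doubling of resolution multiplies that by eight, so 512³ would need 512 MiB for the distance field alone.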

Real-time Relighting

Research Insights

Technical Boundaries

Simple solutions for visual artifacts remain elusive

Alternative Approaches

Point cloud post-processing opens promising possibilities for new features

Design Priority

Focus on novel user experiences over perfect visual fidelity
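As one illustration of the point cloud post-processing direction noted above, the sketch below implements radius outlier removal: points with too few neighbours within a given radius are dropped. Isolated points of this kind are typical of depth-sensor noise at depth discontinuities, which relates to the sensor noise discussed under Visual Coherence. This is a generic technique, not something the write-up says TeleWindow used; the function name and parameters are hypothetical. A uniform hash grid with cell size equal to the radius keeps each query to the 27 surrounding cells.

```python
"""Radius outlier removal for a point cloud (hypothetical post-processing sketch)."""
from collections import defaultdict
import math


def radius_outlier_removal(points, radius, min_neighbours):
    """Keep points that have at least `min_neighbours` other points within `radius`."""

    def cell(p):
        # Cell size equals the radius, so any neighbour lies in an adjacent cell.
        return tuple(int(math.floor(c / radius)) for c in p)

    grid = defaultdict(list)
    for i, p in enumerate(points):
        grid[cell(p)].append(i)

    kept = []
    for i, p in enumerate(points):
        cx, cy, cz = cell(p)
        count = 0
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    for j in grid.get((cx + dx, cy + dy, cz + dz), ()):
                        if j != i and math.dist(p, points[j]) <= radius:
                            count += 1
        if count >= min_neighbours:
            kept.append(p)
    return kept
```

Filters like this trade a little surface detail for cleaner silhouettes, in keeping with prioritizing the experience over perfect fidelity.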

Future Directions

I'm now carrying these insights into a new project built on Looking Glass Factory's holographic display technology, continuing to investigate where technical capability meets human experience in telepresence systems.

Acknowledgments

Special thanks to Michael Naimark and Dave Santiano for their guidance and mentorship throughout this research journey.